Beginning with Machine Learning - from a beginner

How I'm starting with machine learning, beginning with CPU vs GPU computing

4/27/2024 · 1 min read

CPU vs GPU for Machine-Learning Training

A hands-on guide for absolute beginners

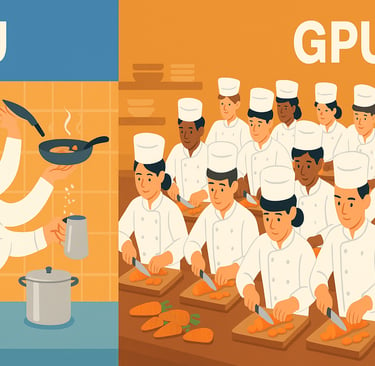

0. A kitchen-table analogy

CPU (Central Processing Unit)

Imagine a master chef with four to sixteen very talented hands. Each hand can do almost any cooking task (chop, sauté, season), one after another with great flexibility.

GPU (Graphics Processing Unit)

Now picture hundreds of junior cooks, each trained to do just one simple chopping motion. They can’t fry or plate, but if you give them a mountain of carrots they’ll finish the job in seconds.

In machine learning, “carrots” are the tiny math operations (add, multiply) that turn data and model weights into predictions. When your recipe needs millions of identical chops at once—think image classification or large language models—the GPU wins. When your dish involves lots of different steps—loading data, cleaning text, running small models—the CPU often holds its own.
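To make the "carrots" concrete, here is a minimal pure-Python sketch of a matrix multiply, the operation at the heart of most neural-network layers. The function name `matmul_count_ops` and the tiny matrices are made up for illustration; real code would call a library such as NumPy or PyTorch rather than loop by hand.

```python
def matmul_count_ops(a, b):
    """Multiply a (m x k) by b (k x n) and count the multiply-adds performed."""
    m, k, n = len(a), len(b), len(b[0])
    out = [[0.0] * n for _ in range(m)]
    ops = 0
    for i in range(m):
        for j in range(n):
            for p in range(k):
                # The same "chop" every time: one multiply, one add.
                out[i][j] += a[i][p] * b[p][j]
                ops += 1
    return out, ops


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
result, ops = matmul_count_ops(a, b)
print(result)  # [[19.0, 22.0], [43.0, 50.0]]
print(ops)     # 8
```

Every pass through the inner loop is identical and independent of the others, which is why a GPU can hand each one to a different "junior cook". The count grows as m × k × n, so realistic layer sizes reach millions of operations quickly.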

1. What a CPU does best

Typical CPU-only wins

Logistic regression on 100 k rows

Random-forest feature screening

Training a small LSTM to predict weekly sales on Excel-sized data

2. What a GPU does best

Typical GPU wins

ResNet-50 image classifier on 1 M photos

Transformer language model fine-tuning

Reinforcement-learning agents needing millions of simulated frames

3. Behind the speed numbers (conceptual benchmark)

4. Hidden costs & gotchas

5. How to choose in practice

Estimate model math

Millions of dense matrix multiplies per epoch? → try GPU

Mostly branching logic / decision trees? → CPU
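One rough way to estimate model math is to count multiply-adds for a fully connected network. The sketch below is only a back-of-the-envelope estimate: the helper name `dense_macs_per_epoch`, the layer sizes, and the dataset size are illustrative assumptions, and the "backward pass costs about twice the forward pass" factor is a common rule of thumb, not an exact figure.

```python
def dense_macs_per_epoch(layer_sizes, num_examples):
    """Rough multiply-add count for one forward pass over the whole dataset.

    layer_sizes: e.g. [784, 128, 10] means 784 inputs -> 128 hidden -> 10 outputs.
    Assumption: fully connected layers only; biases and activations are ignored.
    """
    macs_per_example = sum(
        n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )
    return macs_per_example * num_examples


# Hypothetical small network on 60,000 examples.
forward = dense_macs_per_epoch([784, 128, 10], num_examples=60_000)

# Rule of thumb (approximate): training costs about 3x the forward pass.
training = 3 * forward

print(f"{forward:,} forward multiply-adds")
print(f"~{training:,} multiply-adds per training epoch")
```

Even this small network needs billions of multiply-adds per epoch. Decision trees, by contrast, spend most of their time on comparisons and branching, which this kind of count does not capture.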

Check dataset size

Fits in GPU RAM? Good.

If not, do you stream batches from disk? Might erase GPU benefit.
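A quick size estimate can answer the "fits in GPU RAM?" question before you rent anything. This is a sketch under stated assumptions: the helper names, the 8 GiB card, and the 50% headroom reserved for the model, gradients, and activations are all hypothetical values chosen for illustration.

```python
def dataset_size_gib(num_examples, values_per_example, bytes_per_value=4):
    """Size of a dense dataset in GiB (4 bytes per value assumes float32)."""
    return num_examples * values_per_example * bytes_per_value / 1024**3


def fits_in_memory(size_gib, gpu_memory_gib, headroom=0.5):
    """True if the data fits after reserving `headroom` for everything else.

    headroom is an assumed fraction, not a measured one.
    """
    return size_gib <= gpu_memory_gib * (1 - headroom)


# 1 M photos at 224 x 224 pixels x 3 colour channels, stored as float32.
photos = dataset_size_gib(1_000_000, 224 * 224 * 3)
# 100 k rows of tabular data with 20 numeric features.
table = dataset_size_gib(100_000, 20)

print(f"photos: {photos:.1f} GiB, fits on 8 GiB card: {fits_in_memory(photos, 8)}")
print(f"table:  {table:.4f} GiB, fits on 8 GiB card: {fits_in_memory(table, 8)}")
```

The photo set comes out at hundreds of GiB, so it would have to be streamed in batches, while the table is only a few megabytes.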

Prototype on CPU first

Verify code correctness and baseline accuracy.
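If you happen to use PyTorch (an assumption; the steps here apply to any framework), one common pattern is to choose the device in a single place so the same script runs on a CPU today and a GPU later. The helper name `pick_device` is hypothetical; `torch.cuda.is_available()` is the standard PyTorch check.

```python
def pick_device(prefer_gpu=True):
    """Return "cuda" if PyTorch can see a GPU and one is wanted, else "cpu"."""
    try:
        import torch  # optional dependency; fall back to CPU without it
    except ImportError:
        return "cpu"
    if prefer_gpu and torch.cuda.is_available():
        return "cuda"
    return "cpu"


# Prototype on the CPU first, even on a machine that has a GPU.
device = pick_device(prefer_gpu=False)
print(device)  # cpu
```

Once the CPU run is correct, switching to `pick_device(prefer_gpu=True)` and moving the model and batches to that device is usually the only change needed.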

Profile

Time your training loop or use built-in profilers.

If the GPU is below 50% utilized, the bottleneck is probably elsewhere (often data loading), and a CPU may be fine.
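Timing does not need special tools. The sketch below wraps any training function with `time.perf_counter` from the standard library; `time_epochs` and `fake_epoch` are hypothetical names, and `fake_epoch` is only a stand-in for a real training epoch.

```python
import time


def time_epochs(train_one_epoch, num_epochs=3):
    """Run train_one_epoch several times and return each duration in seconds."""
    durations = []
    for _ in range(num_epochs):
        start = time.perf_counter()
        train_one_epoch()
        durations.append(time.perf_counter() - start)
    return durations


def fake_epoch():
    # Stand-in workload; replace with your real training step.
    total = 0.0
    for i in range(200_000):
        total += i * 0.5
    return total


durations = time_epochs(fake_epoch)
print([f"{d:.4f}s" for d in durations])
```

Timing several epochs rather than one helps, because the first epoch often includes one-off setup costs. For GPU utilization on NVIDIA hardware, the `nvidia-smi` command-line tool reports a utilization percentage while training runs.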

Consider cost & availability

Cloud GPU: roughly $0.50–$4 per hour.

Local laptop: free CPU cycles.

For a school project, CPU may finish overnight and cost $0.
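The trade-off can be worked out in a few lines. This sketch uses the hourly price range quoted above; the 8-hour overnight CPU run and the 10x GPU speedup are hypothetical numbers, so measure your own before deciding.

```python
def cloud_cost(hours, rate_per_hour):
    """Cost in dollars of renting a machine for `hours` at `rate_per_hour`."""
    return hours * rate_per_hour


cpu_hours = 8.0          # assumed: one overnight run on a laptop
assumed_speedup = 10.0   # assumed: GPU is 10x faster for this job
gpu_hours = cpu_hours / assumed_speedup

low = cloud_cost(gpu_hours, 0.50)   # cheapest rate quoted above
high = cloud_cost(gpu_hours, 4.00)  # most expensive rate quoted above

print(f"CPU: {cpu_hours:.1f} h for $0")
print(f"GPU: {gpu_hours:.1f} h for ${low:.2f} to ${high:.2f}")
```

With these assumptions the GPU saves about seven hours for a few dollars at most. Whether that is worth it depends on how often you need to rerun the job.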

6. Quick cheat-sheet table

7. Take-aways

CPUs excel at flexible, branching workloads and modest-sized models.

GPUs shine when your training loop is a giant pile of identical math operations.

Always profile before you pay—sometimes smarter data handling beats fancier hardware.

Start small, prove your idea on a CPU, then scale up to a GPU when math and data justify the jump.

Jared Vogler

industrial engineer

Location

Charleston, South Carolina